Six newly identified sites in the temporal lobes may be key to how the brain extracts rich information from the human voice.

People have a remarkable ability to perceive different kinds of information from voice alone, such as a speaker’s identity, meaning, and emotional state, says Xiaoqin Wang, a professor of biomedical engineering at Tsinghua University and head of the Tsinghua Laboratory of Brain and Intelligence. “When we pick up the phone, we often know who’s calling after hearing only a few words.”

However, the question of how our brains extract and process such information is one that has eluded neuroscientists for years. Finding the answer, Wang says, could help us better understand why some people aren’t good at interpreting social cues.

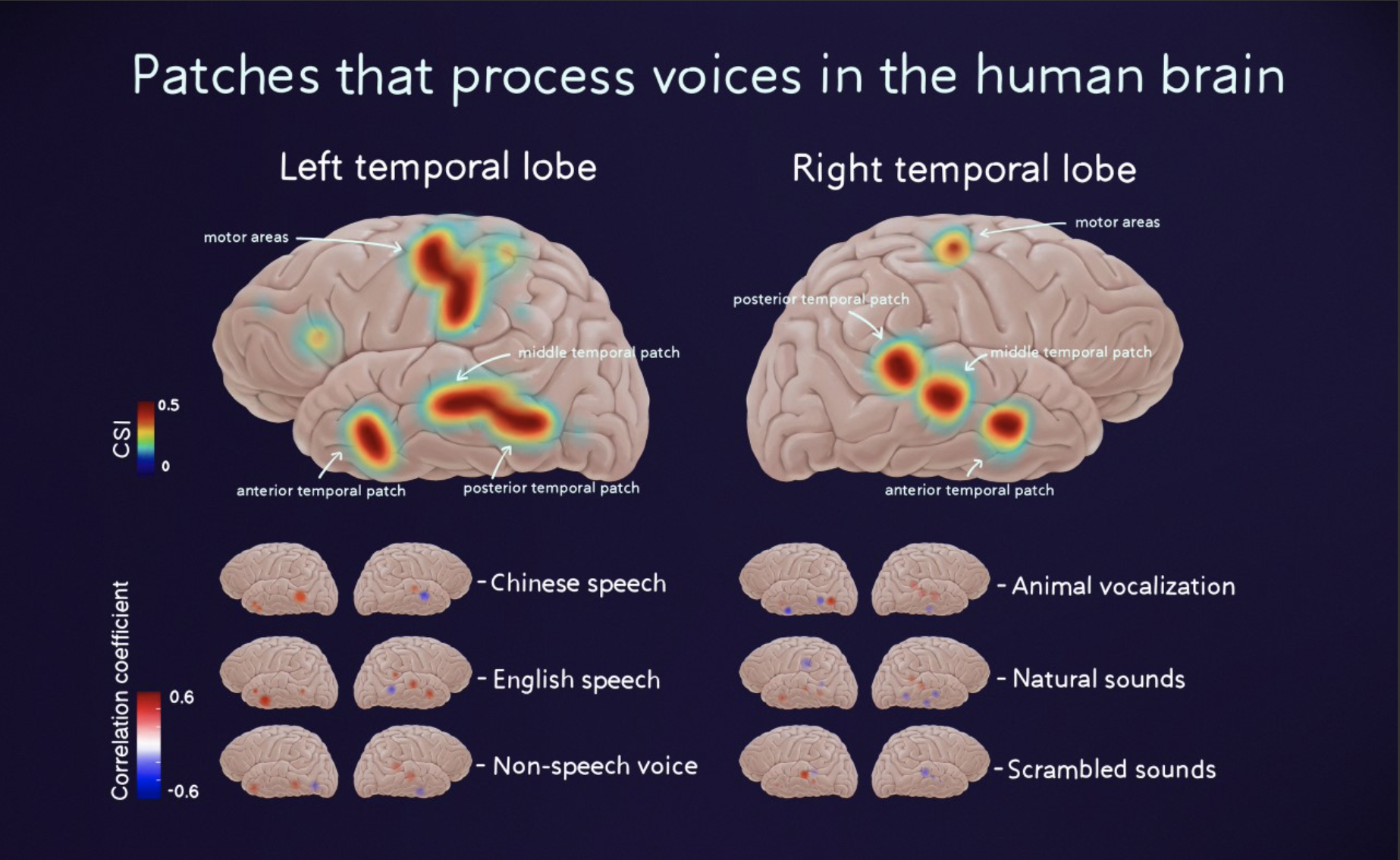

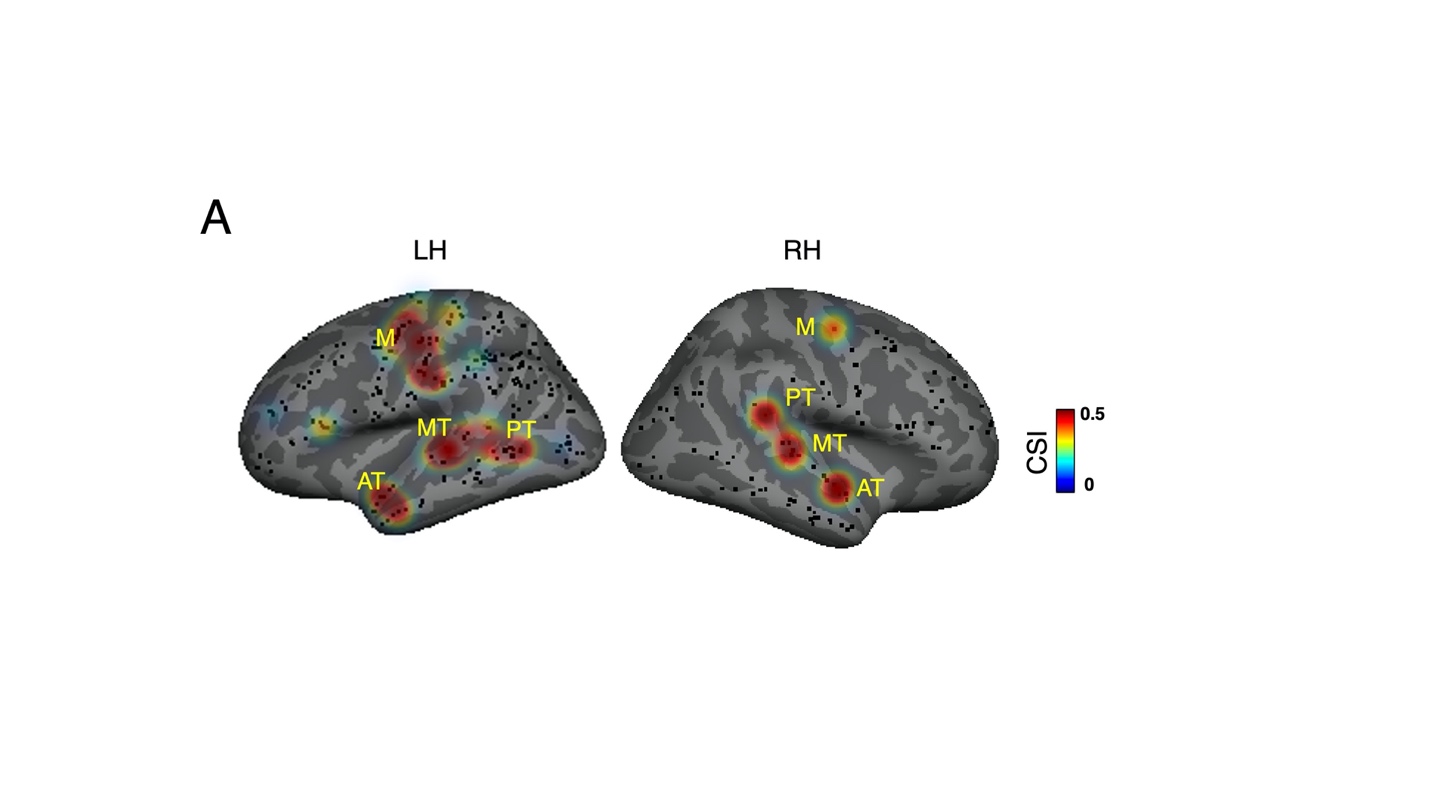

The brains of all five participants showed the same pattern of increased electrical activity in response to human voices, as measured by a category selectivity index (CSI). Motor areas were also shown to co-activate.

In a paper published in December 2021 in the Proceedings of the National Academy of Sciences, Wang and his collaborators describe how they identified specialized regions of the brain that are responsible for processing the voices we hear.

The regions, which they call ‘voice patches’, are located in the superior temporal gyrus (STG) of the temporal lobe, the part of the brain that sits just above ear level and is associated with auditory processing. There are three patches in a row in each hemisphere.

Linked voice specialists

“The significance of the voice patches is that they form a network of connected brain regions that are specialized in processing human voices,” explains Wang. This interconnectivity remains whether a person is actively paying attention to a sound or not.

His team observed this by studying five people with epilepsy who had electrodes placed on the surface of their brains to locate the source of their seizures ahead of surgery. The researchers measured the electrical activity of the patients’ brains as they listened to six different types of sounds: half were voices of native Chinese and English speakers, while the other half were non-voice sounds such as animal vocalizations and noises from nature.

When listening to the former category, the patients’ brain activity was significantly higher, implying that the patches are more selective for voice over non-voice sounds, says Wang. “Voices are a special class of sounds…different from environmental ones, instrumental music ones, or sounds made by animals.”

Three areas in the left temporal lobe and three in the right temporal lobe were preferentially activated by voices over non-voice sounds. In each hemisphere, the three areas have been dubbed the posterior temporal patch (PT), middle temporal patch (MT) and anterior temporal patch (AT). Motor areas (M) were also shown to co-activate.

To arrive at this finding, his team also took a markedly different approach to testing. Traditionally, researchers use functional magnetic resonance imaging (fMRI) or other types of scanners to see which part of the brain is being used as a patient carries out various tasks. But these techniques only measure neural activity indirectly and “have limited capacity to reveal the brain’s response to the dynamic properties of human voices in real time,” says Wang.

Instead, his team used electrocorticography, a type of electrophysiological monitoring that records electrical activity directly from the cerebral cortex through electrodes placed on the exposed surface of the brain.

The group will next study how the brain extracts and processes the emotional information that voices can carry. It’s fascinating work, says Wang, because what they learn could have tremendous implications for understanding how we speak, how we hear, and how we interact with others across a lifetime.

Reference

Zhang, Y. et al. Hierarchical cortical networks of “voice patches” for processing voices in human brain. PNAS 118 (52), e2113887118 (2021) doi: 10.1073/pnas.2113887118

Editor: Guo Lili